Understanding the Basics of Neural Networks

Neural networks are at the heart of many of today's most exciting technological advances, from self-driving cars to medical diagnosis. But what exactly are they? This post explains the fundamental concepts behind neural networks and gives you a solid foundation for further study. We'll cover their core components, how those components interact, and the principles that let networks learn from data.

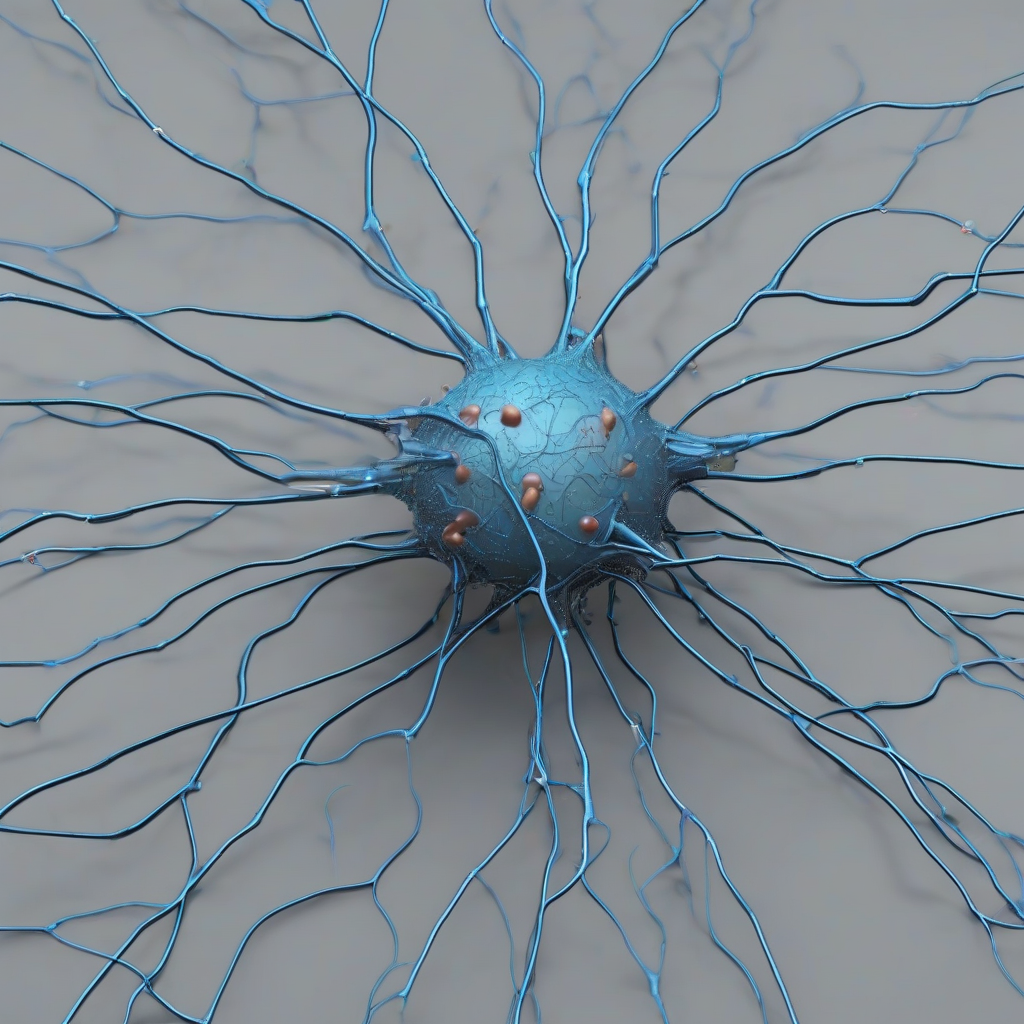

The Biological Inspiration: Neurons and Synapses

The name "neural network" itself hints at its inspiration: the human brain. Our brains consist of billions of interconnected neurons, which communicate through synapses. Synapses transmit signals between neurons, and these connections strengthen or weaken over time based on experience. Artificial neural networks mimic this structure and function, albeit in a highly simplified manner.

Each neuron in the brain receives input signals from other neurons. If the sum of these inputs surpasses a certain threshold, the neuron "fires," sending a signal to other connected neurons. This fundamental process forms the basis of information processing in the brain.
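The firing rule just described is the idea behind the earliest artificial neuron models, such as the McCulloch-Pitts neuron. The sketch below is illustrative only. The function name `threshold_unit`, the weights of 1, and the threshold of 1 are arbitrary choices. They make the unit fire only when both of its binary inputs are active, so it computes a logical AND.

```python
def threshold_unit(inputs, weights, threshold):
    """Return 1 ("fire") if the weighted input sum exceeds the threshold, else 0.

    Hypothetical helper for illustration; biological neurons are far more complex.
    """
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > threshold else 0


# With both weights set to 1 and a threshold of 1, the unit fires only when
# both inputs are active, i.e. it computes logical AND.
for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", threshold_unit([a, b], [1, 1], threshold=1))
```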

Artificial Neurons: The Building Blocks

Artificial neural networks are composed of interconnected nodes, also known as neurons or units. These artificial neurons work in a way loosely analogous to their biological counterparts. Each neuron receives input, processes it, and produces an output. This output is then passed on to other neurons in the network.

The process involves several key steps:

Weighted Inputs: Each input signal is multiplied by a weight, representing the strength of the connection between the neurons. These weights are crucial; they are adjusted during the learning process to optimize the network's performance.

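Summation: The weighted inputs are summed together, usually along with a bias term. The bias is an extra learnable value that shifts the sum up or down, much like adjusting the firing threshold of a biological neuron.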

Activation Function: The sum is then passed through an activation function. This function introduces non-linearity into the network, allowing it to learn complex patterns. Without it, any stack of layers would reduce to a single linear transformation, no matter how many layers it had. Common activation functions include sigmoid, ReLU (Rectified Linear Unit), and tanh (hyperbolic tangent). The choice of activation function depends on the specific application and network architecture.

Output: The output of the activation function is the neuron's output, which is then passed on to other neurons in the network.
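The four steps above can be sketched for a single neuron in plain Python. The inputs, weights, and bias `b` are made-up numbers. The bias is a standard extra parameter added to the weighted sum. The three activation functions are the ones named in the list.

```python
import math


def sigmoid(z):
    # Squashes any real number into the range (0, 1).
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    # Passes positive values through unchanged and clips negatives to 0.
    return max(0.0, z)


def tanh(z):
    # Squashes any real number into the range (-1, 1).
    return math.tanh(z)


def neuron(inputs, weights, bias, activation):
    # Weighted inputs and summation: multiply each input by its weight, sum, add the bias.
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    # Activation function; its result is the neuron's output.
    return activation(z)


x = [0.5, -1.0, 2.0]   # example inputs
w = [0.4, 0.3, -0.2]   # example weights
b = 0.1                # example bias
for fn in (sigmoid, relu, tanh):
    print(fn.__name__, round(neuron(x, w, b, fn), 4))
```

Here the weighted sum plus bias is -0.4. ReLU therefore outputs 0, while sigmoid and tanh still produce small graded responses.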

Layers and Connections: The Network Architecture

Artificial neural networks are not simply collections of individual neurons; they are organized into layers. A typical neural network consists of three types of layers:

Input Layer: This layer receives the initial data, representing the input features of the problem. For example, in an image recognition task, the input layer might represent the pixel values of an image.

Hidden Layers: These layers lie between the input and output layers. They perform complex transformations on the input data, extracting relevant features and patterns. Networks can have one or more hidden layers, with deeper networks generally capable of learning more complex patterns. The number of hidden layers and the number of neurons in each layer are crucial design choices that impact the network's performance.

Output Layer: This layer produces the final output of the network, representing the network's prediction or classification. The number of neurons in the output layer depends on the task. For example, a binary classification problem (e.g., spam detection) typically uses a single output neuron whose value represents the probability of the positive class. A multi-class classification problem (e.g., recognizing which of ten digits appears in an image) typically uses one output neuron per class.
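The following sketch makes the output-layer choice concrete. It assumes a common convention, though not the only option. For a binary task, a single sigmoid neuron is read as the probability of the positive class. For a multi-class task, each class gets one neuron, and a softmax turns the raw scores into probabilities that sum to 1. The scores are invented for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def softmax(scores):
    # Subtracting the max score first avoids overflow and does not change the result.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Binary task (e.g. spam vs. not spam): one output neuron.
spam_score = 1.2  # hypothetical raw output of the final neuron
p_spam = sigmoid(spam_score)
print("P(spam) =", round(p_spam, 3))

# Multi-class task (e.g. three image classes): one output neuron per class.
class_scores = [2.0, 0.5, -1.0]  # hypothetical raw outputs
probs = softmax(class_scores)
print("class probabilities:", [round(p, 3) for p in probs])
print("predicted class:", probs.index(max(probs)))
```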

The connections between neurons define the network's architecture. These connections can be fully connected (each neuron in one layer is connected to every neuron in the next layer) or sparsely connected (only certain neurons are connected). The choice of architecture depends on the specific problem and the desired level of complexity.
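A fully connected layer computes one weighted sum per output neuron, and each sum uses every input. The sketch below is in plain Python with arbitrary weights. It builds a small 3-4-2 network this way, then models sparse connectivity with a 0/1 `mask` that drops some connections. The helper `layer_forward` and the mask are hypothetical and for illustration only. Real libraries implement sparse and convolutional connectivity far more efficiently than masking a dense layer.

```python
def layer_forward(inputs, weights, biases, activation, mask=None):
    """Forward pass of one layer (hypothetical helper for illustration).

    weights[j][i] is the weight from input i to output neuron j.
    If mask is given, mask[j][i] == 0 drops that connection (a sparse layer).
    """
    outputs = []
    for j, (row, bias) in enumerate(zip(weights, biases)):
        z = bias
        for i, (x, w) in enumerate(zip(inputs, row)):
            if mask is None or mask[j][i]:
                z += w * x
        outputs.append(activation(z))
    return outputs


def relu(z):
    return max(0.0, z)


def identity(z):
    return z


# Input layer (3 values) -> hidden layer (4 neurons) -> output layer (2 neurons).
x = [1.0, 0.5, -0.5]
W1 = [[0.2, -0.1, 0.4], [0.5, 0.3, -0.2], [-0.3, 0.8, 0.1], [0.1, 0.1, 0.1]]
b1 = [0.0, 0.1, 0.0, -0.1]
W2 = [[0.3, -0.4, 0.2, 0.5], [-0.2, 0.6, 0.1, 0.0]]
b2 = [0.0, 0.0]

hidden = layer_forward(x, W1, b1, relu)
output = layer_forward(hidden, W2, b2, identity)
print("hidden:", hidden)
print("output:", output)

# Same layer sizes, but each hidden neuron sees only some of the inputs.
mask1 = [[1, 0, 0], [1, 1, 0], [0, 1, 1], [0, 0, 1]]
sparse_hidden = layer_forward(x, W1, b1, relu, mask=mask1)
print("sparse hidden:", sparse_hidden)
print("connections used:", sum(map(sum, mask1)), "of", 3 * 4)
```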

Learning and Training: Adjusting the Weights

The power of neural networks lies in their ability to learn from data. Learning means adjusting the weights of the connections between neurons to minimize the difference between the network's predictions and the actual values. This is typically done in two parts. Backpropagation computes how much each weight contributed to the error, that is, the gradient of the error with respect to that weight. Gradient descent then uses those gradients to adjust the weights iteratively in the direction that reduces the error.
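Backpropagation is the chain rule of calculus, applied step by step from the loss back toward the weights. The sketch below works it out by hand for a deliberately tiny network. It has one input `x`, one tanh hidden unit with weight `w1`, one linear output with weight `w2`, and a squared-error loss. All values, including the learning rate `lr`, are arbitrary. The code checks the hand-derived gradients against central finite differences and then takes one gradient descent step.

```python
import math


def forward(w1, w2, x):
    h = math.tanh(w1 * x)  # hidden activation
    y_hat = w2 * h         # network output
    return h, y_hat


def loss(w1, w2, x, y):
    _, y_hat = forward(w1, w2, x)
    return 0.5 * (y_hat - y) ** 2  # squared error


def backprop(w1, w2, x, y):
    # Forward pass, keeping the intermediate value h for the backward pass.
    h, y_hat = forward(w1, w2, x)
    # Backward pass: chain rule from the loss toward each weight.
    d_yhat = y_hat - y              # dL/dy_hat
    d_w2 = d_yhat * h               # dL/dw2 = dL/dy_hat * dy_hat/dw2
    d_h = d_yhat * w2               # dL/dh = dL/dy_hat * dy_hat/dh
    d_w1 = d_h * (1.0 - h * h) * x  # tanh'(u) = 1 - tanh(u)^2 and du/dw1 = x
    return d_w1, d_w2


x, y = 1.5, 0.5
w1, w2 = 0.8, -0.3

g1, g2 = backprop(w1, w2, x, y)

# Numerical check: central differences approximate the same derivatives.
eps = 1e-5
n1 = (loss(w1 + eps, w2, x, y) - loss(w1 - eps, w2, x, y)) / (2 * eps)
n2 = (loss(w1, w2 + eps, x, y) - loss(w1, w2 - eps, x, y)) / (2 * eps)
print("backprop gradients:", g1, g2)
print("numerical gradients:", n1, n2)

# One gradient descent step: move each weight against its gradient.
lr = 0.1
before = loss(w1, w2, x, y)
w1, w2 = w1 - lr * g1, w2 - lr * g2
after = loss(w1, w2, x, y)
print(f"loss {before:.4f} -> {after:.4f}")
```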

The training process involves feeding the network a large dataset of input-output pairs. The network makes predictions, and a loss function measures the error between these predictions and the actual outputs. Backpropagation then computes the gradients of this error with respect to the weights. The weights are updated using those gradients, which reduces the error over time. This process is repeated over many passes through the data until the network reaches a satisfactory level of accuracy or stops improving.
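The training loop just described can be sketched end to end for the smallest possible case: a single sigmoid neuron learning logical AND from four input-output pairs. This is a toy built on several assumptions. The dataset, learning rate, epoch count, and zero initialization are arbitrary. The error is measured with binary cross-entropy, a common choice for sigmoid outputs. Plain batch gradient descent updates the weights.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Training data for logical AND: (inputs, target output).
data = [((0.0, 0.0), 0.0), ((0.0, 1.0), 0.0), ((1.0, 0.0), 0.0), ((1.0, 1.0), 1.0)]

w = [0.0, 0.0]  # weights, starting at zero
b = 0.0         # bias
lr = 1.0        # learning rate
n = len(data)

for epoch in range(5000):
    grad_w = [0.0, 0.0]
    grad_b = 0.0
    total_loss = 0.0
    for (x1, x2), target in data:
        # Forward pass: the neuron's current prediction.
        z = w[0] * x1 + w[1] * x2 + b
        p = sigmoid(z)
        # Binary cross-entropy, written in terms of z to avoid taking log(0).
        total_loss += math.log1p(math.exp(-z)) if target == 1.0 else math.log1p(math.exp(z))
        # Backward pass: for sigmoid + cross-entropy, dLoss/dz = p - target.
        dz = p - target
        grad_w[0] += dz * x1
        grad_w[1] += dz * x2
        grad_b += dz
    # Gradient descent step using the average gradient over the dataset.
    w = [w[0] - lr * grad_w[0] / n, w[1] - lr * grad_w[1] / n]
    b -= lr * grad_b / n
    if epoch % 1000 == 0:
        print(f"epoch {epoch}: average loss {total_loss / n:.4f}")

predictions = [round(sigmoid(w[0] * x1 + w[1] * x2 + b)) for (x1, x2), _ in data]
print("predictions:", predictions)
```

A single neuron can only learn patterns that a straight line can separate, such as AND. Problems like XOR need at least one hidden layer.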

Different Types of Neural Networks

The basic principles discussed above form the foundation for a wide variety of neural network architectures. Some popular examples include:

Convolutional Neural Networks (CNNs): Specifically designed for processing grid-like data such as images and videos. They use convolutional layers, which slide small learned filters across the input, to extract spatial features such as edges and textures.
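At the core of a convolutional layer is a small filter, or kernel. The filter slides across the input grid and computes a weighted sum at each position. The sketch below applies a hand-picked 3x3 vertical-edge filter to a tiny made-up "image", with no padding and a stride of 1. In a real CNN the filter values are learned rather than chosen by hand. Like most deep learning libraries, this sketch computes cross-correlation, so the kernel is not flipped.

```python
def conv2d(image, kernel):
    """Valid (no padding), stride-1 2D cross-correlation, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Weighted sum of the image patch currently under the kernel.
            s = 0.0
            for i in range(kh):
                for j in range(kw):
                    s += image[r + i][c + j] * kernel[i][j]
            row.append(s)
        output.append(row)
    return output


# A 5x5 "image": dark left part (0), bright right part (1).
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]

# Hand-picked filter that responds where brightness increases from left to right.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

for row in conv2d(image, kernel):
    print(row)
```

The output is large where the filter window straddles the dark-to-bright boundary and zero where the window covers only the uniform bright region. The same filter is reused at every position, which is why convolutional layers need far fewer weights than fully connected ones on images.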

Recurrent Neural Networks (RNNs): Designed for processing sequential data such as text and time series. They have recurrent (feedback) connections that carry a hidden state from one time step to the next, giving them a memory of previous inputs.
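An RNN's "memory" is a hidden state that is fed back in at every time step. Below is a minimal sketch of the standard (Elman-style) update, h_t = tanh(w_x * x_t + w_h * h_(t-1) + b). It uses a single number as the hidden state to keep the example short. The weight values are arbitrary assumptions.

```python
import math


def rnn(sequence, w_x, w_h, b, h0=0.0):
    """Run a one-unit recurrent cell over a sequence and return every hidden state."""
    h = h0
    states = []
    for x in sequence:
        # Feedback connection: the previous state h is an input to the next update.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


params = {"w_x": 0.8, "w_h": 0.5, "b": 0.0}

# Two sequences that differ only in their first element.
states_a = rnn([1.0, 0.0, 0.0, 0.0], **params)
states_b = rnn([-1.0, 0.0, 0.0, 0.0], **params)
print("final state A:", round(states_a[-1], 4))
print("final state B:", round(states_b[-1], 4))
```

The final states differ only because of the first input, which shows the memory at work. The difference also shrinks at every step, because the old state is multiplied by `w_h` and then squashed by tanh. This is a small-scale preview of why plain RNNs struggle with long-range dependencies.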

Long Short-Term Memory (LSTM) Networks: A type of RNN that uses gates to control what information its internal cell state keeps, adds, and outputs. This design mitigates the vanishing gradient problem, allowing LSTMs to learn long-range dependencies in sequential data.
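The LSTM's key addition is a separate cell state, which is updated additively through gates. The sketch below shows one step of a single-unit (scalar) LSTM using the standard forget, input, and output gates. The parameter values are hand-picked assumptions, not learned ones. Real LSTMs use vectors and weight matrices. The forget gate is biased toward 1 and the input gate toward 0. With that setting, a value stored in the cell survives 50 steps of distracting input almost unchanged.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One step of a single-unit LSTM. p maps each gate name to (w_x, w_h, bias)."""

    def pre(gate):
        w_x, w_h, bias = p[gate]
        return w_x * x + w_h * h_prev + bias

    f = sigmoid(pre("forget"))       # how much of the old cell state to keep
    i = sigmoid(pre("input"))        # how much new information to write
    g = math.tanh(pre("candidate"))  # the candidate value to write
    o = sigmoid(pre("output"))       # how much of the cell state to expose
    c = f * c_prev + i * g           # additive cell-state update
    h = o * math.tanh(c)             # new hidden state
    return h, c


# Hand-picked parameters: forget gate close to 1, input gate close to 0.
params = {
    "forget": (0.0, 0.0, 10.0),
    "input": (0.0, 0.0, -10.0),
    "candidate": (1.0, 0.0, 0.0),
    "output": (0.0, 0.0, 0.0),
}

h, c = 0.0, 0.9  # start with a value already stored in the cell
for _ in range(50):
    h, c = lstm_step(1.0, h, c, params)  # feed the same input at every step
print("cell state after 50 steps:", round(c, 3))
```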

Applications and Future Directions

Neural networks have already revolutionized many fields, and their impact is only expected to grow. They are used in:

Image recognition and object detection: Powering applications like self-driving cars and facial recognition systems.

Natural language processing: Enabling chatbots, machine translation, and sentiment analysis.

Medical diagnosis: Assisting doctors in identifying diseases and predicting patient outcomes.

Financial modeling: Predicting market trends and managing risk.

Research into neural networks is evolving rapidly, with new architectures and training techniques being developed all the time. Neural networks hold immense potential for solving even more complex problems and driving further technological advances, and understanding the fundamentals is the crucial first step in appreciating their power and potential.